The Chernoff inequality (or Chernoff bound) is used in probability to determine the exponentially decreasing upper bound on the tail of a random variable based on its moment-generating function. It does not refer to a particular form of inequality but the technique used to determine this bound.

Among its many forms, the multiplicative form of the Chernoff inequality bounds the error relative to the mean.

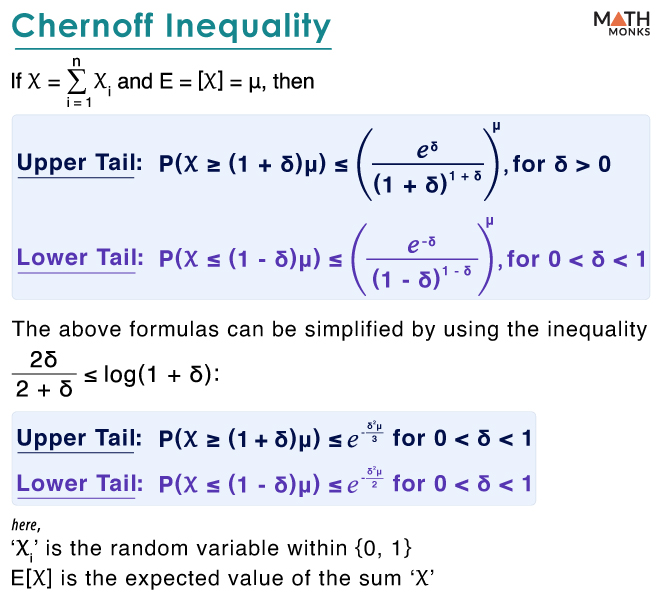

Mathematically, it is written as:

If ${X=\sum ^{n}_{i=1}X_{i}}$, where ‘Xi’ is the random variable within {0, 1} and the expected value of the sum is E(X) = μ, then

- Upper Tail: ${P( X\geq \left( 1+\delta \right) \mu ) \leq \left( \dfrac{e^{\delta }}{\left( 1+\delta \right) ^{1+\delta }}\right) ^{\mu }}$, for δ > 0

- Lower Tail: ${P( X\leq \left( 1-\delta \right) \mu ) \leq \left( \dfrac{e^{-\delta }}{\left( 1-\delta \right) ^{1-\delta }}\right) ^{\mu }}$, for 0 < δ < 1

The above formula can be simplified by following the inequality ${\dfrac{2\delta }{2+\delta }\leq \log \left( 1+\delta \right)}$:

- Upper Tail: ${P( X\geq \left( 1+\delta \right) \mu ) \leq e^{-\dfrac{\delta ^{2}\mu }{2+\delta }}}$, for δ > 0. Now, if we restrict the value as 0 < δ < 1, then Chernoff bound yields ${P( X\geq \left( 1+\delta \right) \mu ) \leq e^{-\dfrac{\delta ^{2}\mu }{3}}}$

- Lower Tail: ${P( X\leq \left( 1-\delta \right) \mu ) \leq e^{-\dfrac{\delta ^{2}\mu }{2}}}$, for 0 < δ < 1

Proof

For Upper Tail

By Chebyshev and Markov’s inequalities, we get

${P( X > \left( 1+\delta \right) \mu ) =P( e^{tX} > e^{t\left( 1+\delta \right) \mu })}$

${\leq \dfrac{E\left( e^{tX}\right) }{e^{t\left( 1+\delta \right) \mu }}}$, where the optimal value t > 0 …..(i)

Now, let us consider no values are 0 or 1.

Since each ‘Xi’ is independent, we have

${E\left( e^{tX}\right)}$

= ${E\left( e^{t\left( X_{1}+X_{2}+\ldots +X_{n}\right) }\right)}$

= ${E\left( \prod ^{n}_{i=1}e^{tX_{i}}\right)}$

= ${\prod ^{n}_{i=1}E\left( e^{tX_{i}}\right)}$

Computing E(tXi) with the random variable ‘et’ for the probability ‘pi’ and 1 for the probability (1 – pi)

Thus, ${\prod ^{n}_{i=1}E\left( e^{tX_{i}}\right)}$ = ${\prod ^{n}_{i=1}\left( 1+p_{i}\left( e^{t}-1\right) \right)}$

Since 1 + x < ex for all x > 0 and if at least one pi > 0, then

${\prod ^{n}_{i=1}( 1+p_{i}\left( e^{t}-1\right) <\prod ^{n}_{i=1}e^{p_{i}\left( e^{t}-1\right) }=e^{\left( P_{1}+P_{2}+\ldots +P_{n}\right) \left( e^{t}-1\right) }}$

Since ${\sum ^{n}_{i=1}p_{i}=E\left( X\right) =\mu}$, we get

${\prod ^{n}_{i=1}( 1+p_{i}\left( e^{t}-1\right) <e^{\left( e^{t}-1\right) \mu }}$ …..(ii)

Using (ii) in (i), we get

${P( X > \left( 1+\delta \right) \mu )\leq \dfrac{e^{\left( e^{t}-1\right) \mu }}{e^{t\left( 1+\delta \right) \mu }}}$ …..(iii)

Now, minimizing the value of ‘t’ through the differentiation with respect to ‘t,’ we get

${\dfrac{d}{dt}\left( e^{\mu \left( e^{t}-1-t-t\delta \right) }\right) =0}$

⇒ ${e^{\mu \left( e^{t}-1-t-t\delta \right) }\left( e^{t}-1-\delta \right) =0}$

⇒ et = 1 + δ

Thus, the minimum value of ‘t’ is ln(1 + δ)

By substituting t = ln(1 + δ) into the expression (iii), we get

${P( X\geq \left( 1+\delta \right) \mu ) \leq \left( \dfrac{e^{\delta }}{\left( 1+\delta \right) ^{1+\delta }}\right) ^{\mu }}$, which is the general formula.

Now, rewriting the R.H.S of the above expression, we get

${P( X\geq \left( 1+\delta \right) \mu ) \leq \left( e^{\delta -\left( 1+\delta \right) \ln \left( 1+\delta \right) }\right) ^{\mu }}$ …..(iv)

From Taylor’s expansion,

${\ln \left( 1+\delta \right) =\sum ^{\infty }_{k=1}\dfrac{\left( -1\right) ^{k+1}\delta ^{k}}{k}}$

⇒ ${\left( 1+\delta \right) \ln \left( 1+\delta \right) =\delta +\sum ^{\infty }_{k=2}\dfrac{\left( -1\right) ^{k+1}\delta ^{k}}{k}+\dfrac{\sum ^{\infty }\left( -1\right) ^{k}\delta ^{k}}{k}=2^{k-1}}$

⇒ ${\left( 1+\delta \right) \ln \left( 1+\delta \right) =\delta +\sum ^{\infty }_{k=2}\left( -1\right) ^{k}\delta ^{k}\left( \dfrac{1}{k-1}-\dfrac{1}{k}\right)}$

Now, considering 0 < δ < 1, we have

${\left( 1+\delta \right) \ln \left( 1+\delta \right) \geq \delta +\dfrac{\delta ^{2}}{2}-\dfrac{\delta ^{3}}{6}\geq \delta +\dfrac{\delta ^{2}}{2}-\dfrac{\delta ^{2}}{6}=\delta +\dfrac{\delta ^{2}}{3}}$

From (iv),

${P( X\geq \left( 1+\delta \right) \mu ) \leq e^{\delta -\left( \delta +\dfrac{\delta ^{2}}{3}\right) \mu }}$

⇒ ${P( X\geq \left( 1+\delta \right) \mu ) \leq e^{-\dfrac{\delta ^{2}\mu }{3}}}$

Thus, Chernoff’s inequality for upper tail bounds is proved.

For Lower Tail

To prove for the lower tail, we use the logarithmic expression ${\ln \left( 1-x\right) =-x-\dfrac{x^{2}}{2}-\dfrac{x^{3}}{3}-\ldots}$

If δ ≤ 1, we have

${\ln \left( 1-\delta \right) >-\delta -\dfrac{\delta ^{2}}{2}}$

Now, by raising the power of both sides to (1 – δ) and dropping the highest-order terms, we get

${\left( 1-\delta \right) ^{1-\delta } >e^{-\delta +\dfrac{\delta ^{2}}{2}}}$

Now, considering 0 < δ < 1, we have

${P( X\leq \left( 1-\delta \right) \mu ) \leq e^{-\dfrac{\delta ^{2}\mu }{2}}}$, for 0 < δ < 1

Thus, Chernoff’s inequality for lower tail bounds is proved.