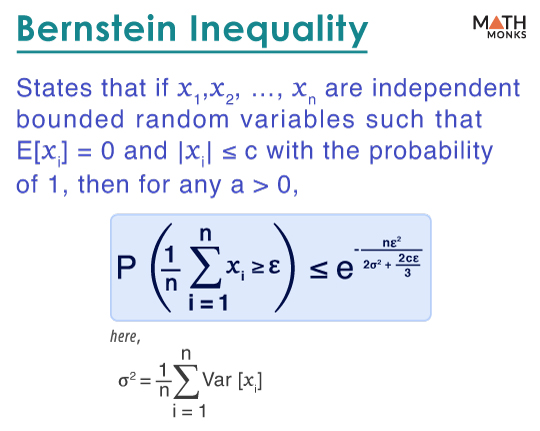

Bernstein inequality states that if ‘x1,’ ‘x2,’ …, ‘xn’ are independent bounded random variables such that E[xi] = 0 and |xi| ≤ c with the probability of 1, then for any a > 0,

${P( \dfrac{1}{n}\sum ^{n}_{i=1}x_{i}\geq \varepsilon ) \leq e^{-\dfrac{n\varepsilon ^{2}}{2\sigma ^{2}+\dfrac{2c\varepsilon }{3}}}}$

Here, σ2 = ${\dfrac{1}{n}\sum ^{n}_{i=1}Var\left\{ x_{i}\right\}}$

Proof

Let us consider ${F_{i}=\sum ^{\infty }_{r=2}\dfrac{S^{r-2}E\left[ x_{i}^{r}\right] }{r!\sigma _{i}^{2}}}$, here, ${\sigma _{i}^{2}=E\left[ x_{i}^{2}\right]}$

Now, ${e^{x}=1+x+\sum ^{\infty }_{r=2}\dfrac{E\left[ x_{i}^{r}\right] }{r!}}$

⇒ ${E\left[ e^{sx_{i}}\right] =1+sE\left[ x_{i}\right] +\sum ^{\infty }_{r=2}\dfrac{E\left[ x_{i}^{r}\right] }{r!}}$

Since E[xi] = 0, we get ${E\left[ e^{sx_{i}}\right] =1+F_{i}s^{2}\sigma _{i}^{2}\leq e^{F_{i}s^{2}\sigma _{i}^{2}}}$

Now, let us consider the term ${E\left[ x_{i}^{r}\right]}$

Since the expectation of a function is the integral of the function with respect to a probability measure, then ${E\left[ x_{i}^{r}\right] =\int _{P}x_{i}^{r-1}x_{i}}$

By Schwarz’s inequality, we get

${E\left[ x_{i}^{r}\right] =\int _{P}x_{i}^{r-1}x_{i}\leq \left( \int _{P}\left| x_{i}^{r-1}\right| ^{2}\right) ^{\dfrac{1}{2}}\left( \int _{P}\left| x_{i}\right| ^{2}\right) ^{\dfrac{1}{2}}}$

⇒ ${E\left[ x_{i}^{r}\right] \leq \sigma _{i}\left( \int _{P}\left| x_{i}^{r-1}\right| ^{2}\right) ^{\dfrac{1}{2}}}$

Using Schwarz’s inequality recursively ‘n’ times, we get

${E\left[ x_{i}^{r}\right] \leq \sigma _{i}^{1+\dfrac{1}{2}+\left( \dfrac{1}{2}\right) ^{2}+\ldots +\left( \dfrac{1}{2}\right) ^{n-1}}\left( \int _{P}\left| x_{i}^{\left( 2^{n}r-2^{n+1}-1\right)}\right| \right) ^{\dfrac{1}{2^{n}}}}$

⇒ ${E\left[ x_{i}^{r}\right] \leq \left\{ \sigma _{i}^{2\left( 1-\left( \dfrac{1}{2}\right) ^{n}\right)}\left( \int _{P}\left| x_{i}^{\left( 2^{n}r-2^{n+1}-1\right)}\right| \right) ^{\dfrac{1}{2^{n}}}\right\}}$ …..(i)

Since, |xi| ≤ c, thus, we get

${\left( \int _{p}\left| x_{i}^{\left( 2^{n}r-2^{n+1}-1\right) }\right| \right) ^{\dfrac{1}{2^{n}}}\leq \left( c^{\left( 2^{n}r-2^{n+1}-1\right) }\right) ^{\dfrac{1}{2^{n}}}}$

From (i), ${E\left[ x_{i}^{r}\right] \leq \left\{ \sigma _{i}^{2\left( 1-\left( \dfrac{1}{2}\right) ^{n}\right)}c^{\left( r-2-\dfrac{1}{2^{n}}\right)} \right\}}$

Taking the limit of ‘n’ to infinity, we get

${E\left[ x_{i}^{r}\right] \leq \lim _{n\rightarrow \infty }\left\{ \sigma _{i}^{2\left( 1-\left( \dfrac{1}{2}\right) ^{n}\right)}c^{\left( r-2-\dfrac{1}{2^{n}}\right)} \right\}}$

⇒ ${E\left[ x_{i}^{r}\right] \leq \sigma _{i}^{2}c^{r-2}}$ …..(ii)

Thus, ${F_{i}=\sum ^{\infty }_{r=2}\dfrac{s^{r-2}E\left[ x_{i}^{r}\right] }{r!\sigma _{i}^{2}}\leq \sum ^{\infty }_{r=2}\dfrac{s^{r-2}\sigma _{i}^{2}c^{r-2}}{r!\sigma _{i}^{2}}}$

⇒ ${F_{i}\leq \dfrac{1}{s^{2}c^{2}}\sum ^{\infty }_{r=2}\dfrac{s^{r}c^{r}}{r!}=\dfrac{1}{s^{2}c_{i}^{2}}\left( e^{sc}-1-sc\right)}$ …..(iii)

Now, applying (iii) in (ii), we get

${E\left[ x_{i}^{r}\right] \leq e^{s^{2}\sigma _{i}^{2}\dfrac{\left( e^{sc}-1-sc\right) }{s^{2}c^{2}}}}$ …..(iv)

Since ${\sigma ^{2}=\dfrac{\sigma _{i}^{2}}{n}}$, then by Chernoff’s bound,

${P( S_{n}\geq a) \leq e^{-sa}e^{s^{2}n\sigma ^{2}\dfrac{\left( e^{sc}-1-sc\right) }{s^{2}c^{2}}}}$ …..(v)

By minimizing the R.H.S. with respect to ‘s,’ we get

${\dfrac{de^{s^{2}n\sigma ^{2}\left( \dfrac{\left( e^{sc}-1-sc\right) }{s^{2}c^{2}}\right) -sa}}{ds}=e^{s^{2}n\sigma ^{2}\left( \dfrac{\left( e^{sc}-1-sc\right) }{s^{2}c^{2}}\right) -sa}\left( n\sigma ^{2}\left( \dfrac{\left( ce^{sc}-c\right) }{c^{2}}\right) -a\right) =0}$

⇒ ${\dfrac{e^{sc}-1}{c}=\dfrac{a}{n\sigma ^{2}}}$

Thus, we have ${s=\dfrac{1}{c}\log \left( \dfrac{ac}{n\sigma ^{2}}+1\right)}$

Substituting the value of ‘s’ in (v), we get

${P( S_{n}\geq a) \leq e^{\dfrac{n\sigma ^{2}}{c^{2}}\left( \dfrac{ac}{n\sigma ^{2}}-\log \left( \dfrac{ac}{n\sigma ^{2}}+1\right) \right) -\dfrac{a}{c}\log \left( \dfrac{ac}{n\sigma ^{2}}+1\right) }}$

${\leq e^{\dfrac{n\sigma ^{2}}{c^{2}}\left( \dfrac{ac}{n\sigma ^{2}}-\log \left( \dfrac{ac}{n\sigma ^{2}}+1\right) -\dfrac{ac}{n\sigma ^{2}}\log \left( \dfrac{ac}{n\sigma ^{2}}+1\right) \right) }}$ …..(vi)

Let us consider the function H(x) = (1 + x) log(1 + x) – x

From (vi), we get

${P( S_{n}\geq a) \leq e^{-\dfrac{n\sigma ^{2}}{c^{2}}H\left( \dfrac{ac}{n\sigma ^{2}}\right) }}$ …..(vii)

Now, considering another function G(x) = ${\dfrac{x^{2}}{2+\dfrac{2x}{3}}}$ such that H(x) ≥ G(x) for all x ≥ 0

Now, applying this in (vii), we get

${P( \sum ^{n}_{i}x_{i}\geq a) \leq e^{-\dfrac{n\sigma ^{2}}{c^{2}}G\left( \dfrac{ac}{n\sigma ^{2}}\right) }}$

⇒ ${P( \sum ^{n}_{i}x_{i}\geq a) \leq e^{\dfrac{-a^{2}}{2\left( ac+3n\sigma ^{2}\right) }}}$

Let a = nϵ. Thus,

${P( \sum ^{n}_{i}x_{i}\geq n\varepsilon ) \leq e^{\dfrac{-\varepsilon ^{2}n^{2}}{2\left( n\varepsilon c+3n\sigma ^{2}\right) }}}$

⇒ ${P( \dfrac{1}{n}\sum ^{n}_{i}x_{i}\geq \varepsilon ) \leq e^{-\dfrac{n\varepsilon ^{2}}{2\sigma ^{2}+\dfrac{2c\varepsilon }{3}}}}$

Hence, Bernstein’s inequality is proved.

Alternative Form

Bernstein inequality can also be expresssed mathematically as:

If P(|xi| ≤ c) = 1 and E[xi] = μ, then for any ϵ > 0,

${P( \left| \overline{x}_{n}-\mu \right| > \varepsilon ) \leq 2\exp \left\{ -\dfrac{n\varepsilon ^{2}}{2\sigma ^{2}+\dfrac{2c\varepsilon }{3}}\right\}}$

Here, σ2 = ${\dfrac{1}{n}\sum ^{n}_{i=1}Var\left\{ x_{i}\right\}}$

Proof

Let us assume E[xi] = μ = 0

From (iv), we get

${E\left[ e^{sx_{i}}\right] \leq \exp \left\{ s^{2}\sigma _{i}^{2}\dfrac{e^{sc}-1-sc}{s^{2}c^{2}}\right\}}$

Now, ${P( \overline{x}_{n} > \varepsilon )}$

= ${P( \sum ^{n}_{i=1}x_{i} > n\varepsilon )}$

= ${P( e^{s\sum ^{n}_{i=1}x_{i}} > e^{sn\varepsilon })}$

≤ ${e^{-sn\varepsilon }E\left[ e^{s\sum ^{n}_{i=1}x_{i}}\right]}$

≤ ${e^{-sn\varepsilon }\exp \left\{ ns^{2}\sigma ^{2}\dfrac{e^{sc}-1-sc}{s^{2}c^{2}}\right\}}$, which implies

${P( \overline{x}_{n} > \varepsilon )}$ ≤ ${e^{-sn\varepsilon }E\left[ e^{s\sum ^{n}_{i=1}x_{i}}\right]}$ …..(viii)

Now, substituting the value of s = ${\dfrac{1}{c}\log \left( \dfrac{c\varepsilon }{\sigma ^{2}}+1\right)}$ in the inequality (viii), we get

${P( \overline{x}_{n} > \varepsilon ) \leq \exp \left\{ -\dfrac{n\sigma ^{2}}{c^{2}}H\left( \dfrac{c\varepsilon }{\sigma ^{2}}\right) \right\}}$

Now, considering the function H(x) = (1 + x) log(1 + x) – x, we observe that H(x) ≥ ${\dfrac{x^{2}}{2+\dfrac{2x}{3}}}$

Thus, ${P( \left| \overline{x}_{n}-\mu \right| > \varepsilon ) \leq 2\exp \left\{ -\dfrac{n\varepsilon ^{2}}{2\sigma ^{2}+\dfrac{2c\varepsilon }{3}}\right\}}$,

Thus, Bernstein’s inequality is proved.