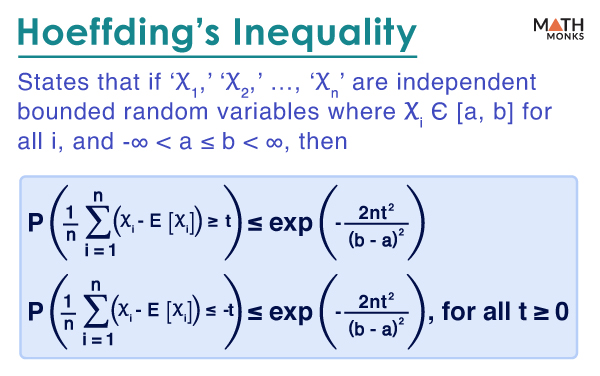

It states that if ‘X1,’ ‘X2,’ …, ‘Xn’ are bounded random variables that are independent with Xi Є [a, b] for all i, and -∞ < a ≤ b < ∞, then

${P\left( \dfrac{1}{n}\sum ^{n}_{i=1}\left( X_{i}-E\left[ X_{i}\right] \right) \geq t\right) \leq \exp \left( \dfrac{-2nt^{2}}{\left( b-a\right) ^{2}}\right)}$ and

${P\left( \dfrac{1}{n}\sum ^{n}_{i=1}\left( X_{i}-E\left[ X_{i}\right] \right) \leq t\right) \leq \exp \left( \dfrac{-2nt^{2}}{\left( b-a\right) ^{2}}\right)}$, for all t ≥ 0

The Hoeffding Inequality gives the upper bound on the probability that the sum Sn deviates from its expected value E[Sn] by more than a certain amount. It is widely used in machine learning theory.

Proof

Using Hoeffding’s Lemma

If ‘X’ is a bounded random variable with X Є [a, b], then

${E\left[ \exp \left( \lambda \left( X-E\left[ X\right] \right) \right) \right] \leq \exp \left( \dfrac{\lambda ^{2}\left( b-a\right) ^{2}}{8}\right)}$, for all λ Є ℝ.

Now, using Jensen’s inequality, we will prove a weaker version of this lemma with a factor of 2 instead of 8.

By Jensen’s inequality, we get:

If f: ℝ → ℝ is a convex function, then f(E[X]) ≤ E[f(X)]

Let us consider f(t) = t2, and E[X] = 0, then f(E[X]) = 0, while we generally have E[X2] > 0.

In both cases, f(t) = exp(t) and f(t) = exp(-t) are convex functions.

Let us consider an independent copy (X′) of X with the same distribution, where X′ Є [a, b] and E[X′] = E[X]

Since X and X′ are independent, then

${E_{X}\left[ \exp \left( \lambda \left( X-E_{X}\left[ X\right] \right) \right) \right] =E_{X}\left[ \exp \left( \lambda \left( X-E_{X’}\left[ X’\right] \right) \right) \right] \leq E_{X}\left[ E_{X’}\exp \left( \lambda \left( X-X’\right) \right) \right]}$, where EX and EX’ denote expected values with respect to X and X′ …..(i)

Here, (i) uses Jensen’s inequality applied to f(x) = e−x

Now, we have

${E\left[ \exp \left( \lambda \left( X-E\left[ X\right] \right) \right) \right] \leq E\left[ \exp \left( \lambda \left( X-X’\right) \right) \right]}$

Since X – X’ is symmetric about zero and S Є {-1, 1} is a random sign variable, then S(X – X’) has the same distribution as that of (X – X’).

Thus, ${E_{X,X’}\left[ \exp \left( \lambda \left( X-X’\right) \right) \right]}$

= ${E_{X,X’,S}\left[ \exp \left( \lambda S\left( X-X’\right) \right) \right]}$

= ${E_{X,X’}\left[ E_{S}\left[ \exp \left( \lambda S\left( X-X’\right) \right) | X,X’\right] \right]}$

Now, generating the function on the random sign, we get

${E_{S}\left[ \exp \left( \lambda S\left( X-X’\right) \right) | X,X’\right] \leq \exp \left( \dfrac{\lambda ^{2}\left( X-X’\right) ^{2}}{2}\right)}$

By our assumption, we have |X – X’| ≤ (b – a) ⇒ (X – X’)2 ≤ (b – a)2

Thus, ${E_{X,X’}\left[ \exp \left( \lambda \left( X-X’\right) \right) \right] \leq \exp \left( \dfrac{\lambda ^{2}\left( b-a\right) ^{2}}{2}\right)}$, which is the result of Hoeffding’s lemma except with a factor of 2 instead of 8

This Hoeffding’s lemma is then used to prove Hoeffding’s theorem, proving only the upper tail since the lower tail already has a similar proof.

Now, by the Chernoff bound technique, we get

${P( \dfrac{1}{n}\sum ^{n}_{i=1}\left( X_{i}-E\left[ X_{i}\right] \right) \geq t) =P( \sum ^{n}_{i=1}( X_{i}-E\left[ x_{i}\right] \geq nt)}$

${\leq E\left[ \exp \left( \lambda \sum ^{n}_{i=1}\left( X_{i}-E\left[ X_{i}\right] \right) \right) \right] e^{-\lambda nt}}$

= ${\left( \prod ^{n}_{i=1}E\left[ e^{xcx_{i}-E\left[ x_{i}\right] }\right] e^{-\lambda nt}\right) \leq \left( \prod ^{n}_{i=1}e^{\dfrac{\lambda ^{2}\left( b-a\right) ^{2}}{8}}\right) e^{-\lambda nt}}$ …..(ii)

Here, (ii) is Hoeffding’s Lemma.

By rewriting this and minimizing over λ ≥ 0, we have

${P\left( \dfrac{1}{n}\sum ^{n}_{i=1}\left( X_{i}-E\left[ X_{i}\right] \right) \geq t\right) \leq \min _{\lambda \geq 0}\exp \left( \dfrac{n\lambda ^{2}\left( b-a\right) ^{2}}{8}-\lambda nt\right) =\exp \left( -\dfrac{2nt^{2}}{\left( b-a\right) ^{2}}\right)}$

Thus, Hoeffding’s inequality is proved.